AI agents vs traditional automation is not just a buzzword comparison in 2026 – it is the core strategic question for any team serious about productivity, scalability, and competitive advantage. For more than a decade, businesses have relied on scripts, macros, RPA bots and workflow engines to automate work. That era is not over, but it is about to be fundamentally redefined.

In 2026, AI agents are moving from experimental side projects to first-class automation citizens. Instead of rigid flows that break when a field name changes, agents can reason, adapt, and orchestrate multiple tools to achieve a goal. Instead of automating one narrow task, they can own entire workflows: research, decisions, actions, and reporting.

This guide explains why 2026 is the breakpoint year where many companies will start shifting from traditional automation to agentic systems – and how you can make that transition deliberately instead of chaotically.

What Are AI Agents in 2026, Really?

Before comparing AI agents vs traditional automation, we need a precise working definition. “Agent” is one of the most abused words in the AI ecosystem.

In this guide, an AI agent is:

- A system powered by an LLM or similar model

- With an explicit goal or task rather than a single prompt

- That can call tools, APIs, or other services

- That can observe its environment through results, state, or feedback

- That can plan, replan, and loop until it reaches a stopping condition

For a deeper technical definition of intelligent software agents, the IBM overview of AI agents is a widely referenced resource in enterprise AI and automation discussions.

Key Capabilities of Modern AI Agents

In 2026, serious AI agents usually share several traits:

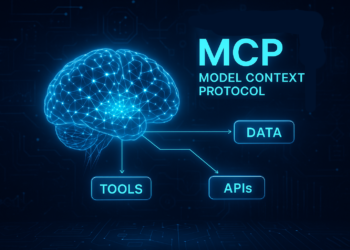

- Tool use: Ability to call external tools through protocols like MCP, APIs, or function calling.

- Memory: Short-term task memory plus access to longer-term knowledge (vector stores, knowledge graphs, or internal docs).

- Planning: Ability to break a goal into steps, execute them, and adjust the plan when something fails.

- Multi-modal I/O: Text, code, sometimes images, logs, or structured data.

- Looping and self-checking: Agents can run multiple steps, verify progress, and refine results.

If you are building or evaluating AI agents today, it is worth reading a dedicated guide such as the Agentic Workflows Beginner Guide and pairing it with standards like the Model Context Protocol (MCP) to understand how tools and context are exposed safely.

What Counts as Traditional Automation?

On the other side of the AI agents vs traditional automation comparison are systems that pre-date today’s LLM stacks. Traditional automation includes:

- Scripts and cron jobs: Shell, Python, PowerShell, etc.

- Macros: Excel macros, keyboard macros, UI scripting.

- RPA (Robotic Process Automation): UI-based automation using tools like UiPath, Automation Anywhere, Blue Prism.

- BPM / workflow engines: Tools like Camunda, Airflow, n8n, Zapier, Make, or custom orchestration services.

When designing compliant workflows, many regulated organisations still rely on security frameworks and guidance from the NIST Computer Security Resource Center.

All of these share the same fundamental property: they are rule-based and deterministic. They follow explicit instructions that humans design. If the environment changes in a way the rules did not anticipate, they break.
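A tiny, hypothetical example of that failure mode: the rule below routes invoices by reading fixed field names. It is fully predictable, but when an upstream system renames `amount` to `total_amount`, the rule does not adapt; it fails until someone edits it. The field names and thresholds are illustrative only.

```python
def route_invoice(invoice: dict) -> str:
    """Hand-written, deterministic routing rule (hypothetical field names)."""
    if invoice["amount"] > 10_000:
        return "finance-approval"
    if invoice["vendor_country"] != "US":
        return "tax-review"
    return "auto-pay"


print(route_invoice({"amount": 12_500, "vendor_country": "US"}))  # finance-approval

try:
    # The upstream system renamed a field; the rule was never told.
    route_invoice({"total_amount": 12_500, "vendor_country": "US"})
except KeyError as missing:
    print(f"rule broke on missing field {missing}")  # rule broke on missing field 'amount'
```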

Strengths of Traditional Automation

Traditional automation is not obsolete. It still has major advantages:

- Predictability: Given the same inputs, you get the same outputs.

- Compliance and auditability: Easy to map rules to policies.

- Performance: Scripts are fast and resource-efficient.

- Maturity: Many organisations already have RPA or workflow teams.

In other words, if you know exactly what should happen and it rarely changes, traditional automation is hard to beat.

Limitations of Traditional Automation

However, the limitations become obvious as complexity grows:

- Brittleness: A small UI change can break an RPA bot.

- High maintenance cost: Every new exception requires new rules.

- Limited reasoning: Scripts cannot decide what to do in novel situations.

- Poor adaptability: Scaling to messy, semi-structured data is painful.

This is where AI agents enter the picture.

AI Agents vs Traditional Automation: Core Differences

To understand why 2026 is the breakpoint year, you need a clear mental model for how AI agents vs traditional automation differ at a systems level.

| Dimension | Traditional Automation | AI Agents |
|---|---|---|
| Decision Logic | Hand-written rules, flows, conditions | Learned policies, LLM reasoning, planning |
| Adaptability | Low – changes require developer work | High – can adapt to new inputs and formats |
| Input Types | Structured data, fixed UIs | Unstructured text, logs, documents, semi-structured data |
| Failure Handling | Explicit error branches | Reasoning loops, self-correction, fallbacks |
| Scope | Single task or narrow process | End-to-end workflows, multi-tool orchestration |
| Authoring | Developers or RPA specialists | Developers + domain experts prompting and configuring agents |

In short, traditional automation is great at running a known script. AI agents are better at figuring out what script to run, in what order, with which tools.

Why 2026 Is the Breakpoint Year

So why not 2023, 2024, or 2025? Several forces converge in 2026 to make AI agents vs traditional automation a practical, not theoretical, choice.

1. Models Are Finally Good Enough at Planning

Early LLMs hallucinated frequently and struggled with multi-step reasoning. In 2026, frontier models and strong open-source models are much better at:

- Breaking goals into tasks

- Handling long contexts

- Using tools iteratively

- Self-critiquing and refining outputs

This makes real-world agents viable for more than toy demos.

2. Tooling Standards Have Emerged

Standards like the Model Context Protocol (MCP) and mature function-calling APIs make it much easier to connect agents to tools, databases, and internal systems without building a snowflake integration for each use case.

This allows teams to treat “tools for agents” as reusable infrastructure, not throwaway glue code.
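To show what a standard buys you, here is a sketch of the message shape MCP uses for tools: JSON-RPC 2.0 requests with the methods `tools/list` and `tools/call`. This is a deliberately simplified, in-process handler, not the official MCP SDK. It skips the initialization handshake, transports, and capability negotiation, checks only the `required` list rather than full JSON Schema validation, and the `lookup_account` tool is hypothetical. The point is that each tool is described once (name, description, input schema) and every agent calls it the same way.

```python
import json
from typing import Any

# Tool registry: one description per tool, shared by every agent.
TOOLS: dict[str, dict[str, Any]] = {
    "lookup_account": {
        "description": "Return plan and billing status for an account ID.",
        "inputSchema": {
            "type": "object",
            "properties": {"account_id": {"type": "string"}},
            "required": ["account_id"],
        },
        # Hypothetical implementation; a real tool would query a billing API.
        "handler": lambda account_id: {"account_id": account_id, "plan": "pro", "billing": "current"},
    }
}


def reply(req_id: Any, key: str, payload: dict) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id, key: payload})


def handle(message: str) -> str:
    """Handle one JSON-RPC 2.0 request using MCP-style tool methods."""
    req = json.loads(message)
    method, params, req_id = req.get("method"), req.get("params", {}), req.get("id")

    if method == "tools/list":
        tools = [{"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                 for name, t in TOOLS.items()]
        return reply(req_id, "result", {"tools": tools})

    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return reply(req_id, "error", {"code": -32602, "message": f"Unknown tool: {params.get('name')}"})
        args = params.get("arguments", {})
        missing = [k for k in tool["inputSchema"]["required"] if k not in args]
        if missing:
            # Tool-level failure goes in the result so the model can react to it.
            text, is_error = f"missing arguments: {missing}", True
        else:
            text, is_error = json.dumps(tool["handler"](**args)), False
        return reply(req_id, "result", {"content": [{"type": "text", "text": text}], "isError": is_error})

    return reply(req_id, "error", {"code": -32601, "message": f"Method not found: {method}"})


call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "lookup_account", "arguments": {"account_id": "acme-42"}}}
print(handle(json.dumps(call)))
```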

3. Economics Favour Agents for Complex Work

Traditional automation is cheap for simple tasks but becomes expensive when adding branching, exception handling, and multi-system integrations. AI agents, by contrast, cost more per run (model tokens and API calls), but they:

- Replace large amounts of integration code

- Handle messy inputs without new rules

- Scale more gracefully to new workflows

When you calculate the total cost of ownership, AI agents vs traditional automation becomes a serious trade-off – and 2026 is when that equation starts favouring agents for many medium-complexity processes.
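A back-of-the-envelope way to run that calculation is sketched below. Every number is a hypothetical placeholder to replace with your own estimates, and the model is deliberately simple (amortised build cost plus per-item run cost plus maintenance labour), so it illustrates the shape of the trade-off rather than a verdict.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    build_cost: float               # one-off engineering cost
    run_cost_per_item: float        # compute, licences, or model tokens per processed item
    maintenance_hours_month: float  # rule updates, bot repairs, prompt/tool upkeep


def monthly_tco(opt: Option, items_per_month: int, hourly_rate: float,
                amortise_months: int = 24) -> float:
    """Monthly total cost of ownership, with build cost spread over amortise_months."""
    return (opt.build_cost / amortise_months
            + opt.run_cost_per_item * items_per_month
            + opt.maintenance_hours_month * hourly_rate)


# Hypothetical figures for one medium-complexity, exception-heavy workflow.
rules = Option("rule-based flow", build_cost=48_000, run_cost_per_item=0.01, maintenance_hours_month=30)
agent = Option("agent + existing scripts", build_cost=36_000, run_cost_per_item=0.25, maintenance_hours_month=8)

for opt in (rules, agent):
    print(f"{opt.name}: ${monthly_tco(opt, items_per_month=5_000, hourly_rate=100):,.0f}/month")
# rule-based flow: $5,050/month
# agent + existing scripts: $3,550/month
```

Rerun it with `items_per_month=50_000` and the per-item model cost dominates, flipping the result in favour of the rule-based flow. That is the same reason simple, high-volume tasks tend to stay with traditional automation.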

4. Organisations Are Ready

By 2026, many teams have already experimented with chatbots and copilots and understand their risks and limitations. The next logical step is: “If a model can assist a human, can it also act on behalf of a human within guardrails?”

This mental shift – from assistant to agent – is driving pilot programs across support, ops, finance, and marketing teams.

Where Traditional Automation Still Wins

Even in 2026, you should not replace everything with AI agents. There are domains where traditional automation remains the best choice.

1. Highly Repetitive, Fully Structured Tasks

Examples:

- Batch ETL pipelines with stable schemas

- Nightly billing exports

- Static report generation from well-defined SQL

If the process is essentially the same every night and rarely changes, a workflow engine or cron-driven script is cheaper and simpler than an agent.

2. Hard Real-Time Systems

LLMs are not suitable for strict real-time constraints. If you must respond in microseconds, you need traditional code, not an AI agent.

3. Regulatory Workflows with Zero Tolerance for Ambiguity

Where every branch must be explicitly approved and auditable, deterministic flows may be non-negotiable. Agents might assist human reviewers but not be allowed to fully automate decisions.

Where AI Agents Beat Traditional Automation

On the other hand, there are scenarios where insisting on traditional automation is a strategic mistake.

1. Messy, Multi-System Workflows

Think of a human support engineer resolving a complex customer issue:

- Reading multiple tickets

- Checking logs

- Reviewing account history

- Cross-checking documentation

- Drafting a tailored reply

Trying to encode this as a fixed RPA flow is painful. Agents shine in exactly these messy workflows.

2. Knowledge-Heavy Processes

Whenever tasks depend heavily on reading, interpreting, and synthesising unstructured content – PDFs, policies, knowledge bases – agents backed by RAG pipelines and MCP-exposed resources are far more capable than brittle scripts.
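The retrieval half of a RAG pipeline can be sketched in a few lines. To stay self-contained, this version swaps embedding search for a plain bag-of-words cosine similarity; a production system would use an embedding model and a vector store, but the contract is the same: rank knowledge-base passages against the question and hand the top hits to the model as context. The knowledge-base entries are hypothetical.

```python
import math
import re
from collections import Counter

KB = {
    "kb-101": "CSV export times out for workspaces with more than 1M rows; use the async export API.",
    "kb-102": "SSO login fails when the IdP certificate has expired; rotate the certificate.",
    "kb-103": "Webhook retries use exponential backoff for up to 24 hours.",
}


def vectorise(text: str) -> Counter:
    """Bag-of-words term counts (stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 2) -> list[tuple[str, float]]:
    """Return the k most similar passages; these become the model's context."""
    q = vectorise(question)
    scored = [(doc_id, cosine(q, vectorise(text))) for doc_id, text in KB.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


print(retrieve("Customer says the CSV export keeps timing out"))
```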

3. Dynamic, Evolving Business Logic

In fast-moving markets, product, pricing, and messaging change constantly. Automations hard-coded into workflows need continuous updates. Agentic systems with configurable goals and prompts adapt more easily.

Hybrid Patterns: AI Agents Orchestrating Traditional Automation

The smartest teams in 2026 do not treat AI agents vs traditional automation as an either/or choice. Instead, they build hybrid architectures where:

- Agents handle reasoning, planning, and orchestration

- Traditional automation handles fast, deterministic operations

For example:

- An AI agent analyses an incident, identifies the likely root cause, and decides to run a remediation playbook.

- The actual remediation steps are implemented as scripts or workflow actions.

- The agent calls those scripts, monitors their outputs, and prepares a human-readable incident report.

This pattern allows you to reuse existing automations while layering intelligence on top.
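Here is a minimal sketch of that division of labour. The agent-side decision (`choose_playbook`) is a hypothetical stand-in for model reasoning; the playbooks are deterministic commands run with `subprocess`, restricted to an allowlist, and the agent only reads their exit codes and output to write the report. The commands just call the current Python interpreter so the example runs anywhere; in practice they would be your existing scripts.

```python
import subprocess
import sys

# Existing deterministic automation, exposed to the agent by name only.
PLAYBOOKS = {
    "restart_worker": [sys.executable, "-c", "print('worker restarted')"],
    "clear_cache": [sys.executable, "-c", "print('cache cleared')"],
}


def choose_playbook(incident: str) -> str:
    """Hypothetical stand-in for the agent's root-cause reasoning."""
    return "clear_cache" if "stale" in incident else "restart_worker"


def remediate(incident: str) -> str:
    name = choose_playbook(incident)
    if name not in PLAYBOOKS:  # a real model could propose anything; only allowlisted names run
        return f"Refused: {name!r} is not an approved playbook."
    proc = subprocess.run(PLAYBOOKS[name], capture_output=True, text=True, timeout=60)
    status = "succeeded" if proc.returncode == 0 else f"failed (exit {proc.returncode})"
    # Human-readable incident report built from the script's actual output.
    return (f"Incident: {incident}\n"
            f"Action: {name} {status}\n"
            f"Output: {proc.stdout.strip() or proc.stderr.strip()}")


print(remediate("Users see stale dashboard data after deploy"))
```

The agent never constructs shell commands itself; it picks a name, and the deterministic layer decides exactly what that name does.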

Reference Architecture: 2026 Agentic Automation Stack

A typical architecture that blends AI agents and traditional automation includes:

- LLM layer: One or more models (hosted or self-hosted) with strong reasoning capabilities.

- Tooling layer: Tools exposed via MCP, APIs, or internal connectors.

- Orchestration/agent layer: The agent runtime that manages goals, plans, and tool calls.

- Automation layer: Existing scripts, RPA bots, workflow engines, and scheduled jobs.

- Data & context layer: Vector stores, warehouses, document stores, logs, and metrics.

- Observation & control layer: Dashboards, logs, audit trails, approvals, and kill switches.

When you implement this stack correctly, “AI agents vs traditional automation” becomes “AI agents controlling a fleet of deterministic automations”.
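The observation and control layer is the easiest one to postpone, so here is a minimal sketch of two of its pieces, an audit trail and a kill switch, wrapped around every tool the agent can call. The `audited` decorator, `AgentHalted` exception, and `rerun_report` tool are illustrative names, not part of any specific framework.

```python
import functools
import json
import threading
import time

KILL_SWITCH = threading.Event()  # set() from an ops console to halt all agent actions
AUDIT_LOG: list[str] = []        # in practice an append-only store, not an in-memory list


class AgentHalted(RuntimeError):
    pass


def audited(tool):
    """Record every tool call and refuse to run once the kill switch is set."""
    @functools.wraps(tool)
    def wrapper(**kwargs):
        entry = {"ts": time.time(), "tool": tool.__name__, "args": kwargs}
        if KILL_SWITCH.is_set():
            AUDIT_LOG.append(json.dumps({**entry, "status": "blocked"}))
            raise AgentHalted(f"kill switch active; {tool.__name__} not executed")
        result = tool(**kwargs)
        AUDIT_LOG.append(json.dumps({**entry, "status": "ok"}))
        return result
    return wrapper


@audited
def rerun_report(report_id: str) -> str:
    return f"report {report_id} re-queued"


print(rerun_report(report_id="weekly-sales"))  # report weekly-sales re-queued
KILL_SWITCH.set()
try:
    rerun_report(report_id="weekly-sales")
except AgentHalted as exc:
    print(exc)  # kill switch active; rerun_report not executed
```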

Step-by-Step Migration: From Scripts to Agents

Here is a practical roadmap for teams in 2026.

Step 1: Inventory Existing Automation

List your cron jobs, RPA bots, workflow engines, and macros. For each, capture:

- Business owner

- Systems touched

- Frequency

- Failure rate and maintenance overhead

Step 2: Identify “Agent-Friendly” Workflows

Look for processes that are:

- Cross-system

- Knowledge-intensive

- Frequently changed

- Currently done by humans despite attempts to automate

These are top candidates for AI agents.
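Steps 1 and 2 can live in a spreadsheet, but a small script keeps the criteria explicit. This sketch records the Step 1 inventory fields and counts how many of the four Step 2 traits each automation has. The records, thresholds, and field names are hypothetical; the point is to make the triage reviewable rather than implicit.

```python
from dataclasses import dataclass


@dataclass
class Automation:
    name: str
    owner: str
    systems: list[str]
    runs_per_month: int
    failure_rate: float            # fraction of runs needing manual repair
    maintenance_hours_month: float
    knowledge_intensive: bool      # reads docs, tickets, free text?
    changes_per_quarter: int       # rule/flow edits in the last quarter
    mostly_manual: bool            # humans still do most of the work


def agent_fit(a: Automation) -> int:
    """Count how many Step 2 traits apply (0-4). Thresholds are illustrative."""
    return sum([
        len(a.systems) >= 3,         # cross-system
        a.knowledge_intensive,       # knowledge-intensive
        a.changes_per_quarter >= 3,  # frequently changed
        a.mostly_manual,             # still done by humans
    ])


inventory = [
    Automation("nightly billing export", "finance", ["erp"], 30, 0.01, 1, False, 0, False),
    Automation("escalated ticket triage", "support", ["helpdesk", "billing", "logs", "wiki"],
               900, 0.20, 25, True, 5, True),
]

# Best agent candidates first; ties broken by maintenance burden.
for item in sorted(inventory, key=lambda x: (agent_fit(x), x.maintenance_hours_month), reverse=True):
    print(f"{item.name}: fit {agent_fit(item)}/4, {item.maintenance_hours_month}h/month upkeep")
```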

Step 3: Expose Tools via a Standard Protocol

Instead of letting each agent talk to APIs directly, expose your tools through a protocol like MCP or a standard internal gateway. This keeps security and governance centralised and reusable.
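A minimal sketch of what "centralised" means in practice: agents never hold credentials or call systems directly; they send named requests to one gateway that applies a per-agent allowlist and records every decision. The agent IDs, tool names, and policy table are hypothetical, and a real gateway would also handle authentication, rate limits, and secrets.

```python
from typing import Any, Callable

# Tool implementations live behind the gateway (hypothetical stubs).
TOOL_IMPLS: dict[str, Callable[..., Any]] = {
    "search_kb": lambda query: [f"doc matching {query!r}"],
    "refund_payment": lambda payment_id, amount: f"refunded {amount} on {payment_id}",
}

# Least-privilege policy: which agent may call which tools.
POLICY: dict[str, set[str]] = {
    "support-drafter": {"search_kb"},
    "billing-agent": {"search_kb", "refund_payment"},
}

DECISIONS: list[tuple[str, str, bool]] = []  # (agent, tool, allowed) audit trail


def gateway_call(agent_id: str, tool: str, **kwargs: Any) -> Any:
    allowed = tool in POLICY.get(agent_id, set()) and tool in TOOL_IMPLS
    DECISIONS.append((agent_id, tool, allowed))
    if not allowed:
        raise PermissionError(f"{agent_id} may not call {tool}")
    return TOOL_IMPLS[tool](**kwargs)


print(gateway_call("support-drafter", "search_kb", query="CSV export timeout"))
try:
    gateway_call("support-drafter", "refund_payment", payment_id="pay_1", amount=20)
except PermissionError as exc:
    print(exc)  # support-drafter may not call refund_payment
```

Because the policy lives in one place, tightening or revoking an agent's access is a single change rather than an audit of every agent's code.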

Step 4: Start with a “Human-in-the-Loop” Phase

Do not switch on full autonomy on day one. Start with agents drafting work, summarising, proposing actions, and routing them for human approval.

Step 5: Gradually Automate Low-Risk Actions

As you gain confidence, allow agents to perform certain actions autonomously within guardrails – e.g. re-running a failed report or restarting a non-critical service.
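One way to express "low-risk actions within guardrails" is an explicit policy table that the runtime checks before every action. The action names, risk tiers, and daily caps below are hypothetical; the design choice worth copying is that anything not listed defaults to requiring approval.

```python
from collections import Counter

# Hypothetical policy: action -> (risk tier, max autonomous runs per day)
AUTONOMY_POLICY = {
    "rerun_failed_report": ("low", 10),
    "restart_noncritical_service": ("low", 3),
    "issue_refund": ("high", 0),
}

runs_today: Counter = Counter()  # reset daily by a scheduler in a real system


def decide(action: str) -> str:
    """Return 'auto' if the agent may act alone, else 'needs_approval'."""
    tier, daily_cap = AUTONOMY_POLICY.get(action, ("unknown", 0))  # unlisted => not autonomous
    if tier == "low" and runs_today[action] < daily_cap:
        runs_today[action] += 1
        return "auto"
    return "needs_approval"


print([decide("restart_noncritical_service") for _ in range(4)])
# ['auto', 'auto', 'auto', 'needs_approval']
```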

Step 6: Measure Impact and Iterate

Track:

- Time saved per workflow

- Error rates

- Escalations to humans

- Incidents caused by automation

Use these metrics to justify expanding agent coverage – or to pause and harden the system where needed.

Deep-Dive Example: Turning a Manual Workflow into an Agentic Flow

To make AI agents vs traditional automation concrete, let’s walk through a realistic transformation inside a SaaS company’s support team.

Original Manual Workflow

Before automation, a senior support engineer:

- Reads a long support ticket thread

- Checks the customer’s plan, billing status, and usage limits

- Logs into dashboards to inspect recent errors and logs

- Searches internal documentation for known issues

- Writes a personalised response with next steps

This can easily take 20–30 minutes for complex tickets.

Traditional Automation Attempt

A traditional automation approach might:

- Trigger a workflow when a “high priority” tag is added

- Fetch account details via API

- Run a pre-defined log query

- Post a summary in Slack or a ticket comment template

This saves time, but breaks whenever fields change, and still leaves most of the reasoning and writing to humans.

Agentic Workflow Version

With AI agents, you can design a flow like this:

- Agent is triggered when a ticket meets certain criteria (severity, ARR, churn risk).

- Agent uses tools (via MCP or internal APIs) to:

  - Retrieve all related tickets and interactions

  - Fetch account, billing, and usage data

  - Pull recent logs and error traces for that user or tenant

  - Search the knowledge base for similar incidents

- Agent analyses the combined context and drafts:

  - A root-cause hypothesis

  - Suggested remediation steps

  - A customer-ready explanation in the right tone

- A human support engineer reviews, edits if needed, and approves the response.

Over time, as confidence grows and guardrails mature, more of this workflow can be delegated to the agent, while still using traditional automation for stable, low-level tasks like log collection and data retrieval.

Tooling Landscape: Platforms for AI Agents vs Traditional Automation

When comparing AI agents vs traditional automation, you are also comparing their ecosystems.

Traditional Automation Stack

- RPA tools: UiPath, Automation Anywhere, Blue Prism

- Job schedulers: cron, Airflow, Prefect

- Integration platforms: Zapier, Make, Workato, n8n

These are battle-tested but assume that humans define flows precisely.

Agentic and LLM-Centric Stack

- Agent frameworks: LangGraph, AutoGen-style orchestration, custom agent runtimes

- Tooling protocols: Model Context Protocol (MCP), function calling, tool registries

- RAG infrastructure: vector DBs, document pipelines

- Observability: agent tracing, replay tools, policy engines

Many teams are discovering that the best strategy is to plug their existing scripts, workflows, and RPA bots into this new stack as tools – instead of trying to replace everything in one go.

90-Day Roadmap to Pilot AI Agents in Your Organisation

If you are serious about exploring AI agents vs traditional automation, here is a pragmatic 90-day rollout plan.

Days 1–30: Discovery and Foundations

- Audit existing automation and identify top candidate workflows

- Set up an LLM provider or self-hosted model with tool-calling

- Choose a protocol (e.g. MCP) or gateway to expose tools

- Define success metrics with stakeholders (time saved, ticket resolution, etc.)

Days 31–60: First Agent Pilot

- Implement 1–2 agents for narrow but meaningful workflows

- Keep humans in the loop for all actions

- Instrument detailed logging and monitoring

- Gather feedback from operators and domain experts

Days 61–90: Scale and Harden

- Expand agent capabilities and tool coverage

- Automate low-risk actions end-to-end

- Formalise ownership and operational runbooks

- Decide which additional workflows should move from traditional automation toward agents

This staged approach prevents the “big bang” failure mode and lets you compare AI agents vs traditional automation using real KPIs, not hype.

KPIs & Metrics for Measuring Agentic Automation

To defend investments in AI agents vs traditional automation, you need hard numbers. Useful metrics include:

- Time to complete workflow: Before vs after agents.

- Human touches per workflow: How many manual steps remain?

- Error or escalation rate: How often do agents require human intervention?

- Coverage: Percentage of eligible workflows using agents.

- Maintenance hours: Time spent maintaining agents vs scripts or RPA bots.
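These metrics are straightforward to compute once each workflow run is logged as a record. A minimal sketch over hypothetical, illustrative run records (not measured data); the field names are assumptions about what your tracing or ticketing system captures, and maintenance hours would come from time tracking rather than run logs.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Run:
    workflow: str
    handled_by: str     # "agent" or "manual"
    minutes: float
    human_touches: int
    escalated: bool


def kpis(runs: list[Run], eligible_workflows: set[str]) -> dict:
    agent = [r for r in runs if r.handled_by == "agent"]
    manual = [r for r in runs if r.handled_by == "manual"]
    return {
        "median_minutes_manual": median(r.minutes for r in manual),  # "before"
        "median_minutes_agent": median(r.minutes for r in agent),    # "after"
        "avg_human_touches_agent": sum(r.human_touches for r in agent) / len(agent),
        "escalation_rate": sum(r.escalated for r in agent) / len(agent),
        "coverage": len({r.workflow for r in agent} & eligible_workflows) / len(eligible_workflows),
    }


runs = [
    Run("ticket-triage", "manual", 28, 5, False),
    Run("ticket-triage", "manual", 22, 4, False),
    Run("ticket-triage", "agent", 6, 1, False),
    Run("ticket-triage", "agent", 9, 2, True),
    Run("report-rerun", "agent", 2, 0, False),
]
print(kpis(runs, eligible_workflows={"ticket-triage", "report-rerun", "invoice-matching"}))
```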

Over a few months, these metrics will reveal where AI agents truly outperform traditional automation, and where classic scripts are still the right tool.

Buying vs Building: Should You Use Off-the-Shelf Agent Platforms?

Another decision point in the AI agents vs traditional automation debate is whether to:

- Build your own agent stack, or

- Adopt vendor platforms that provide “agent-as-a-service”.

Reasons to Build

- Deep integration with internal systems and security

- Full control over data residency and compliance

- Customised workflows that match your domain precisely

Reasons to Buy

- Faster time to value

- Less infrastructure overhead

- Built-in observability, templates, and guardrails

For many teams, a hybrid approach works best: start with a vendor platform to learn, then gradually bring critical or sensitive agent logic in-house.

Looking Ahead: 2027–2030

By 2030, the “AI agents vs traditional automation” debate will probably sound much like the “web apps vs desktop apps” debate did in 2010. The reality will be:

- Most businesses run a mix of agents and deterministic automation.

- Agents orchestrate many workflows end to end.

- Traditional automation handles infrastructure-level, high-reliability tasks.

- Standards like MCP, shared tool registries, and policy engines tie everything together.

If you start now, 2026 can be the year you build a coherent agent-plus-automation strategy – instead of a patchwork of scripts and disconnected pilots.

FAQ: AI Agents vs Traditional Automation

Are AI agents going to replace all traditional automation?

No. Traditional automation is still ideal for stable, structured, and high-reliability tasks. AI agents excel where workflows are messy, cross-system, and knowledge-heavy. The future is hybrid.

How do AI agents access tools and internal systems?

Most serious implementations use a tooling layer or protocol, such as MCP or a custom gateway, to expose tools, APIs, and data sources safely. The agent should never have raw, uncontrolled access to your entire environment.

Is it safe to let AI agents take actions autonomously?

It can be, if you design for safety: least-privilege access, clear scopes, human-in-the-loop for high-risk actions, strong logging, and the ability to override or halt agents quickly.

Do I need a separate team for AI agents?

Not necessarily on day one, but as adoption grows, most organisations benefit from an agent platform function responsible for standards, tooling, security, and governance across teams.

How should I start if I only have scripts and no AI yet?

Begin by inventorying your automation, exposing existing scripts and workflows as tools, and piloting a single well-chosen agentic workflow with human review. You do not need to “rip and replace” everything to start benefiting from AI agents.