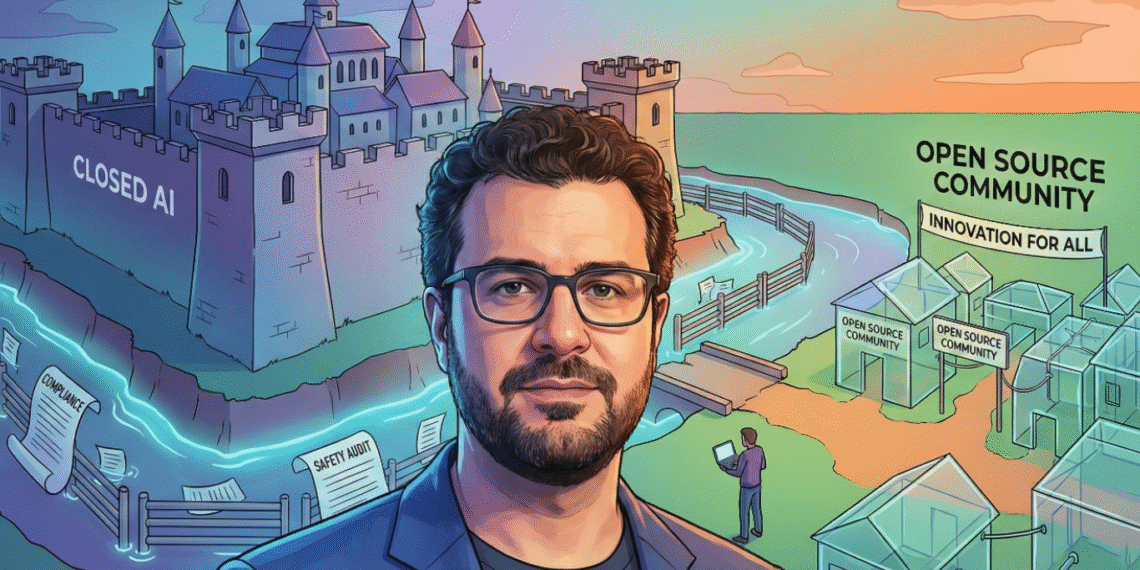

Anthropic CEO Calls for Regulation: Is It Safety or a “Moat” Against Open Source?

The debate over AI regulation, and Anthropic's role in it, has reached a boiling point. In a primetime interview on 60 Minutes this Sunday, Anthropic CEO Dario Amodei sat down with Anderson Cooper to deliver a stark warning: AI is becoming too powerful for any single company to control, and government intervention is not just preferable but urgent.

For the general public, this sounds like a responsible leader asking for guardrails. But for the developer community, the subtext was alarming. Is this truly about protecting humanity from rogue AI, or is Anthropic's push for regulation a strategic “regulatory moat” designed to make Open Source AI illegal?

The “Benevolent Dictator” Paradox of Anthropic Regulation

In the interview, Amodei admitted, “I feel uneasy about these important decisions being made by a small number of companies.”

This statement highlights the central paradox of the current AI landscape. The “Big Three” (OpenAI, Google, Anthropic) command an outsized share of the compute used to train frontier models. By positioning themselves as the “responsible guardians” who need oversight, they are effectively steering the regulatory debate toward a licensing system.

Critics argue that if these proposals become law, the high compliance costs—potentially requiring millions of dollars in “safety audits” per model—would ensure that no startup or open-source project could ever compete with them again.


The “Cyberattack” Controversy: Real Threat or “Safety Theater”?

To make the case for strict regulation, Amodei dropped a bombshell claim: Anthropic says it thwarted a “large-scale AI cyberattack.”

According to the company, state-sponsored hackers attempted to use Claude agents to infiltrate 30 targets worldwide. Amodei cited this as proof that the dangers of autonomous agents are here today, not ten years in the future. He stated that this threat arrived “months ahead” of predictions by cybersecurity firms like Mandiant.

The Open Source Counter-Argument

However, Yann LeCun, Meta’s Chief AI Scientist and a champion of Open Source, isn’t buying it. He has famously dismissed such warnings as “Safety Theater”: scare tactics designed to manipulate legislators into banning open model weights.

LeCun’s argument is simple: AI is a tool. Banning Open Source AI because it might be used for hacking is like banning Linux because hackers use it. It doesn’t stop the bad guys (who will build their own models), but it cripples the good guys (researchers and developers).

The Political Chessboard: Fighting the “Moratorium”

The interview also touched on a critical piece of legislation. Amodei recently wrote a New York Times op-ed criticizing a provision in a federal bill that would have imposed a 10-year moratorium on state-level AI regulation.

This reveals the urgency behind Anthropic's regulatory push: the company is actively lobbying against deregulation and wants rules in place now. Amodei argues that AI is progressing at a “dizzying pace” and could fundamentally alter the world within two years.

Developer Analysis: Why This Matters to Us

As developers building on these APIs, we need to look past the headlines. If Amodei’s vision of regulation becomes law, the coding ecosystem could change drastically:

- The End of Local LLMs: Strict “Safety” laws could make it illegal to release powerful model weights (like Llama 3 or Mistral) to the public without a government license.

- Compliance Costs: If every AI app requires a “Safety Audit” similar to FDA drug approval, indie developers will be priced out of the market. We would be forced to rent intelligence from the “Big Three” forever.

- Centralization: We risk a future where only three companies are allowed to “sell intelligence,” turning AI into a utility controlled by a monopoly.

Conclusion: The Fine Line Between Safety and Control

Anthropic is undoubtedly building impressive tech: its Claude Sonnet models have become favorites for coding tasks thanks to their strong reasoning capabilities. But when a company valued at $183 billion goes on national television to ask for regulation of its own industry, we have to be skeptical.

Are those rules designed to protect humanity from an existential threat? Or are they designed to protect Anthropic’s profit margins from the disruption of Open Source?

Frequently Asked Questions (FAQ)

What did Dario Amodei say on 60 Minutes?

Amodei warned that AI could become autonomous and displace human decision-making. He advocated for AI regulation and government intervention to prevent a few tech CEOs from having too much power.

What is the controversy between LeCun and Amodei?

Dario Amodei believes AI poses existential risks and needs strict control. Yann LeCun believes AI is a tool and that “locking it up” through regulation only hurts innovation and centralizes power in the hands of a few US corporations.

How would Anthropic’s proposed regulation affect Open Source?

If strict regulations are passed based on “AI Safety” fears, it could become difficult or illegal to release open-source models (like Llama or Mistral), forcing developers to pay for closed-source APIs like Claude or GPT-4.

Did Anthropic stop a cyberattack?

Anthropic claims to have stopped a state-sponsored AI cyberattack targeting 30 organizations. However, critics argue that this narrative is being used to accelerate restrictive legislation.