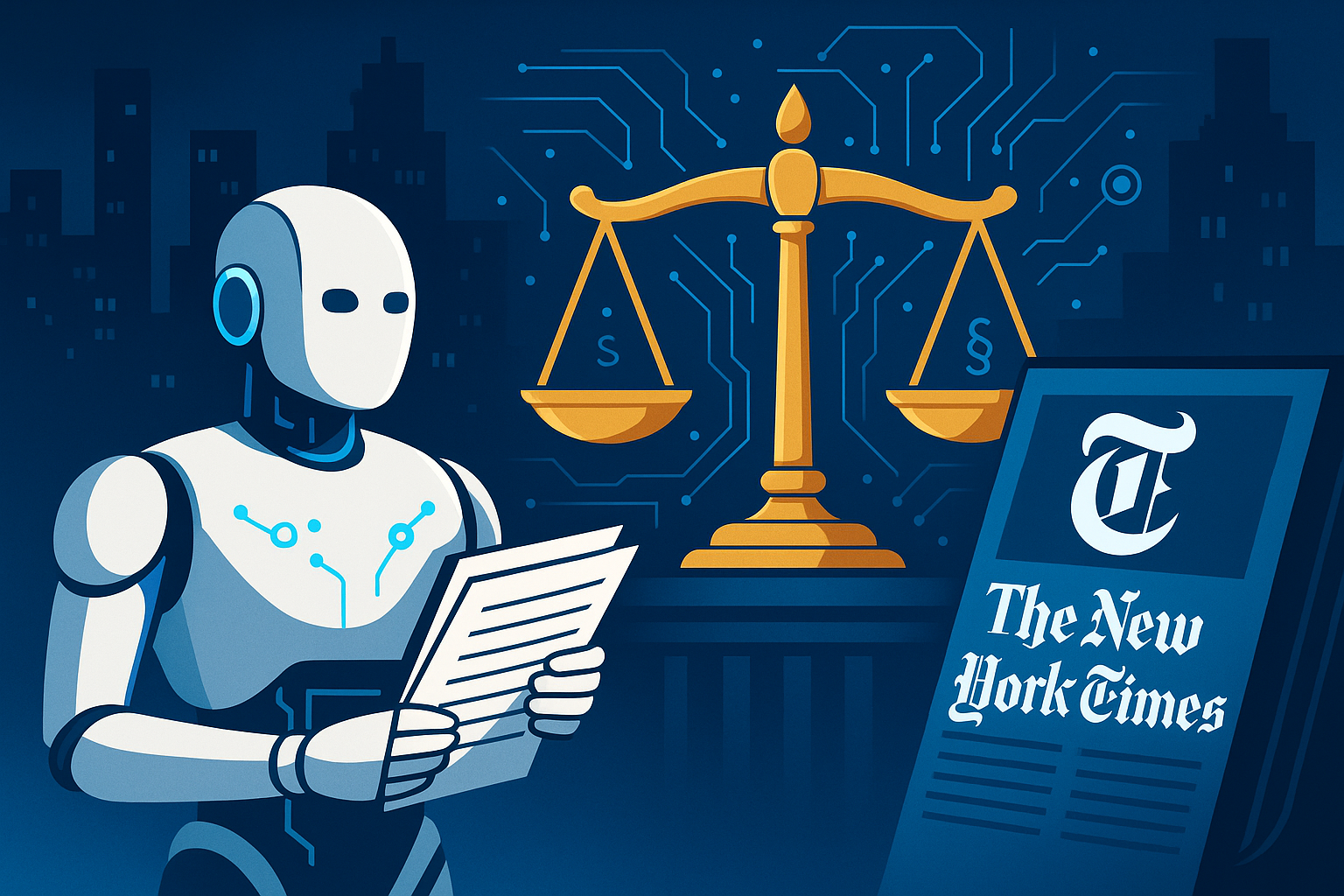

Perplexity AI lawsuit: The New York Times has filed a major case against AI search startup Perplexity that could redefine how AI companies use copyrighted content and how publishers are paid for their work.

In its complaint, the Times accuses Perplexity of copying, summarising and repackaging large volumes of Times reporting without permission. What looks like a dispute between one publisher and one AI company is quickly becoming a landmark test for AI business models built on web data, and one of the most closely watched AI copyright battles heading into 2026.

How the Perplexity AI Lawsuit Could Change AI Search

The New York Times filed the Perplexity AI lawsuit in federal court in New York, arguing that Perplexity built core parts of its AI search and answer experience on Times journalism without a licence or proper compensation. According to the complaint, Perplexity allegedly:

- Accessed and reused Times articles at scale, including content behind the paywall.

- Generated AI summaries that closely mirrored the structure and substance of original Times reporting.

- Displayed the New York Times name and branding alongside AI-generated responses.

- Attributed fabricated or inaccurate statements to the Times, mixing AI hallucinations with a reputable news brand.

In simple terms, the Times says Perplexity did not simply “learn from” its material but turned Times journalism into a core ingredient of a commercial AI product — without permission and without payment.

You can read the New York Times’ own coverage of its position at nytimes.com. The lawsuit itself focuses on the technical and business decisions made by Perplexity.

Why the Perplexity AI Lawsuit Matters for the Future of AI

On the surface, the lawsuit looks like a traditional copyright case. In reality, it raises deeper questions about how AI models and search engines are built:

- How much copyrighted text can be used without a licence?

- Does using paywalled or protected content cross a legal boundary?

- When does summarisation become reproduction?

- How dangerous is it when AI hallucinations are attached to a respected brand?

A strong win for the Times could push AI companies toward licensed, controlled datasets. A win for Perplexity could reinforce broad interpretations of fair use and increase pressure on lawmakers to update copyright rules.

Key Allegations Against Perplexity, in Plain Language

1. Systematic Reuse of Times Journalism

The Times claims Perplexity used its articles as direct fuel for the product, not as incidental training data. According to the complaint, users receive AI answers that mirror Times reporting, often without ever visiting the Times website.

2. Accessing Paywalled and Protected Content

The lawsuit alleges that paywalled and restricted material was accessed and reused. If that is true, it becomes harder to argue that the use was fair use or ordinary search indexing.

3. Hallucinations Using the NYT Brand

The complaint says Perplexity generated content the Times never wrote and presented it under the Times brand. That raises trademark-misuse concerns and the risk of reputational harm to the paper's journalism.

4. A Business Model Built on Unlicensed Content

The Times frames Perplexity’s approach as a deliberate business strategy: building a commercial AI product on unlicensed journalism from publishers who were never consulted or compensated.

What Developers and Startups Should Learn from the Perplexity AI Lawsuit

Even if you’re not building an AI search engine, the lawsuit is a major warning signal. Many AI tools depend on scraped text, documentation and news content for training or retrieval.

Practical lessons for builders:

- Track dataset provenance. Know what data your models are trained on and what your pipelines retrieve.

- Respect paywalls and robots.txt. Treat access restrictions as potential legal boundaries, not obstacles to work around.

- Avoid misleading brand usage. Do not imply endorsement by publishers.

- Expect licensing costs. AI will increasingly require paid content agreements.
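The first two lessons can be made concrete in a few lines. Below is a minimal Python sketch, assuming a hypothetical crawler called `ExampleBot` and an illustrative robots.txt in which `/subscriber/` stands in for a restricted section. It uses the standard library's `urllib.robotparser` to check robots.txt before a page is fetched, then stores that decision with the URL and a timestamp as a basic provenance entry. `ProvenanceRecord` and `check_and_record` are hypothetical names, not part of any existing tool. A robots.txt check is only one signal: it does not detect paywalls and it does not grant a licence.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt (hypothetical). Real sites publish theirs at /robots.txt;
# in production you would call parser.set_url(...) and parser.read() instead.
SAMPLE_ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Disallow: /subscriber/
Allow: /
"""


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical provenance entry kept for every candidate document."""
    url: str
    user_agent: str
    robots_allowed: bool
    checked_at: str  # ISO 8601 UTC timestamp of the robots.txt check


def check_and_record(robots_txt: str, user_agent: str, url: str) -> ProvenanceRecord:
    """Check robots.txt rules for one URL and record the outcome.

    This covers robots.txt only; paywalls, terms of service and
    licensing have to be checked separately.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() takes an iterable of lines
    allowed = parser.can_fetch(user_agent, url)
    return ProvenanceRecord(
        url=url,
        user_agent=user_agent,
        robots_allowed=allowed,
        checked_at=datetime.now(timezone.utc).isoformat(),
    )


if __name__ == "__main__":
    for agent, url in [
        ("ExampleBot", "https://example.com/news/story.html"),
        ("OtherBot", "https://example.com/news/story.html"),
        ("OtherBot", "https://example.com/subscriber/story.html"),
    ]:
        record = check_and_record(SAMPLE_ROBOTS_TXT, agent, url)
        # A crawler following this sketch would fetch only when robots_allowed is True.
        print(record)
```

Keeping the decision next to each URL means a team can later show which rules applied when a document entered its dataset, instead of reconstructing that history after a dispute begins.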

If you are experimenting with AI agents or workflows, use content you legally control. Our agentic workflows beginner guide explains how to build systems that rely on internal or user-provided data.

For broader AI legal risks and model governance concepts, see our guide to agentic AI systems.

Impact on Users and the General Public

For everyday users, nothing changes immediately. Perplexity remains online while the lawsuit moves forward. But the outcome could quietly influence how AI tools behave over the next few years:

- AI search may become more cautious about quoting publishers.

- Advanced features may shift behind paywalls due to licensing costs.

- Transparency rules may require clearer source attribution.

Part of a Broader Wave of AI Copyright Cases

The lawsuit is part of a larger trend. Multiple news organisations are challenging how AI models use journalistic content; the Times itself sued OpenAI and Microsoft in 2023 over the use of its articles to train AI models. Other AI firms face similar lawsuits over training data and model outputs.

This case is different because it targets an AI answer engine that stands directly between readers and publishers. If courts find that such tools substitute for journalism rather than support it, AI search could face major design changes in 2026 and beyond.

The Road Ahead: Possible Outcomes of the Perplexity AI Lawsuit

- Settlement and licensing: Perplexity could sign a licence, setting a template for other AI firms.

- Win for the Times: Courts could narrow fair use for AI systems.

- Win for Perplexity: Broader fair use could strengthen AI firms but push lawmakers toward updated copyright laws.

Whatever the outcome, the lawsuit confirms that “move fast and scrape things” is no longer a safe strategy. AI companies must manage copyright, licensing and legal accountability—alongside model accuracy and user experience.

FAQ: NYT’s Perplexity AI Lawsuit

What is the New York Times accusing Perplexity AI of doing?

The Times alleges Perplexity copied, reused and monetised its journalism at scale without permission, including paywalled articles, and sometimes presented AI-generated content as if it were Times journalism.

Does the Perplexity AI lawsuit affect other AI companies?

Almost certainly, at least indirectly. A ruling on fair use would influence how AI vendors across the industry approach data pipelines, licensing and compliance.

Is it still safe to build AI products using web data?

It can be safe, but developers must understand where data comes from, whether it’s legally accessible and whether outputs risk misleading users.
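One way to reduce the risk of pinning invented statements on a publisher, the problem behind the hallucination allegations, is to show a quotation with a source name only when the retrieved source text actually contains it. The sketch below is a deliberately simple, hypothetical check (the function names and the `Example Daily` source are illustrative): it normalises quotation marks, case and whitespace, then requires a verbatim match. It will not catch misleading paraphrases, which need stronger checks.

```python
import re
from typing import Optional


def normalise(text: str) -> str:
    """Lower-case, straighten curly quotes and collapse runs of whitespace."""
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    text = text.replace("\u2018", "'").replace("\u2019", "'")
    return re.sub(r"\s+", " ", text).strip().lower()


def quote_is_supported(quote: str, source_text: str) -> bool:
    """True only if the non-empty quote appears verbatim (after normalising) in the source."""
    needle = normalise(quote)
    return bool(needle) and needle in normalise(source_text)


def attribute(quote: str, source_name: str, source_text: str) -> Optional[str]:
    """Return the quote with its source label, or None so the caller drops the attribution."""
    if quote_is_supported(quote, source_text):
        return f'"{quote}" ({source_name})'
    return None


if __name__ == "__main__":
    source = "The city council voted 7-2 on Tuesday to approve the new budget."
    print(attribute("voted 7-2 on Tuesday", "Example Daily", source))
    print(attribute("voted unanimously", "Example Daily", source))
```

Returning `None` rather than a softened label forces the calling code to decide explicitly what to show when a quote cannot be verified.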

What should developers and founders do now?

Respect content restrictions, avoid brand misuse, track dataset origins and plan for licensing costs as a normal part of AI development.

The Bottom Line

NYT’s Perplexity AI lawsuit is more than a conflict between a newspaper and a startup. It tests the limits of current copyright law and how far AI companies can stretch it. Its outcome could shape how AI agents, search tools and answer engines operate in 2026 and beyond, and who gets paid for the content they depend on.

Analysis: For AI teams, legal risk now sits alongside model performance and user experience. If your product depends on other people’s content, assume that one day you may need to explain every design decision in front of a judge.